Architecture and Technical Innovations

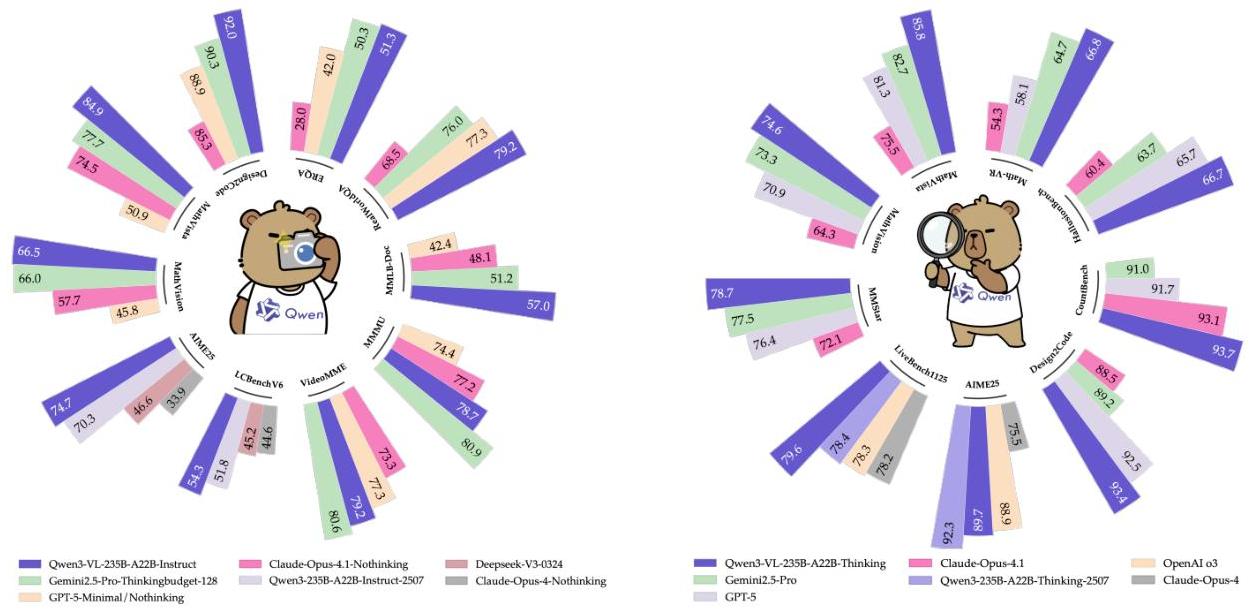

Performance comparison showing Qwen3-VL-235B-A22B variants achieving competitive or state-of-the-art results across diverse multimodal benchmarks

Qwen3-VL is a vision-language model family from Alibaba’s Qwen Team, built on the team’s Qwen3 language models. It introduces three key architectural innovations aimed at stronger multimodal understanding. Its training recipe is designed to preserve strong text-only performance.

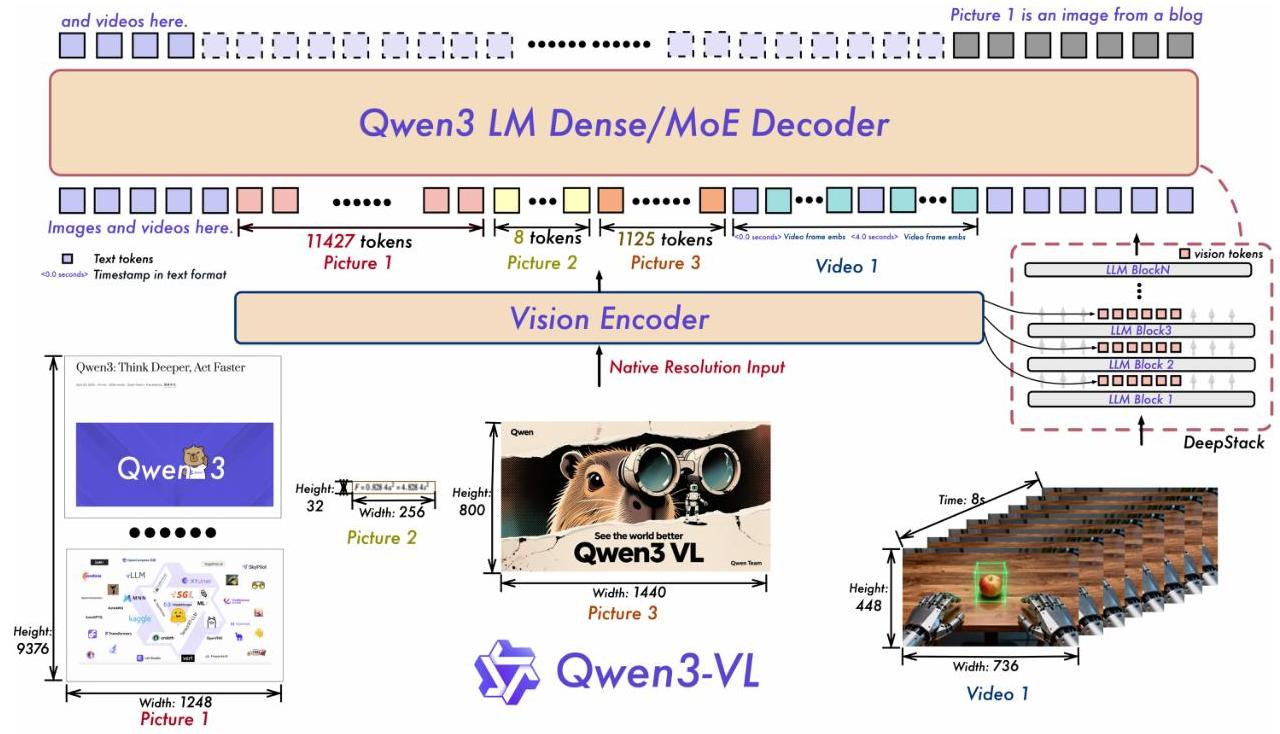

The model architecture consists of three primary components: a vision encoder based on SigLIP-2, an MLP-based vision-language merger, and Qwen3 language model backbones. The team provides multiple variants ranging from 2B to 235B parameters, including both dense models and Mixture-of-Experts (MoE) configurations to balance computational efficiency with performance.
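MoE names such as 235B-A22B follow the Qwen convention of total versus activated parameters. About 235B parameters exist in total, and about 22B (roughly 9%) are activated for each token. This works because an MoE layer routes each token to only a few experts. The sketch below is a generic top-k router in plain Python, not Qwen3-VL’s implementation. The expert count, `k`, the directly supplied router logits (normally produced by a learned linear layer), and the toy scalar experts are all illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float], k: int) -> float:
    """Only the k routed experts are evaluated; the rest are skipped entirely."""
    return sum(g * experts[i](x) for i, g in top_k_route(router_logits, k))


# Toy example: 4 scalar "experts"; each token activates 2 of them.
experts = [lambda x, s=s: s * x for s in range(4)]
print(top_k_route([0.1, 2.0, -1.0, 3.0], k=2))
print(moe_forward(1.0, experts, [0.1, 2.0, -1.0, 3.0], k=2))
```

Taking a softmax over only the selected logits gives the same weights as a softmax over all experts followed by renormalization over the chosen ones. The compute cost per token therefore scales with `k`, not with the total number of experts.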

Three core architectural changes distinguish Qwen3-VL from its predecessors.

Interleaved MRoPE redesigns the multimodal rotary position encoding. Earlier versions gave the temporal, horizontal, and vertical components separate contiguous blocks of embedding dimensions. Interleaved MRoPE instead distributes the three components uniformly across those dimensions, so each axis covers both high and low frequencies. This addresses the spectral bias that the blocked layout caused in video understanding.

DeepStack injects multi-level visual features from intermediate Vision Transformer layers into the corresponding language-model layers. This preserves fine-grained visual information without increasing context length.

Text-based Time Alignment replaces position-ID-based temporal encoding with explicit textual timestamp tokens. These give the model more direct and precise temporal grounding in video content.
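The sketch below makes the frequency argument concrete. It compares two ways of assigning rotary frequency pairs to the t/h/w axes: a contiguous (chunked) layout and an interleaved round-robin layout. Several settings are illustrative assumptions, not confirmed Qwen3-VL values: the 64 frequency pairs, the [16, 24, 24] section split, the base of 10000, and the strict t-h-w round robin.

```python
from typing import Dict, List, Sequence, Tuple


def rope_frequencies(num_pairs: int, base: float = 10000.0) -> List[float]:
    """Standard RoPE inverse frequencies; pair 0 rotates fastest (highest frequency)."""
    dim = 2 * num_pairs
    return [base ** (-2 * j / dim) for j in range(num_pairs)]


def chunked_axes(sections: Sequence[int]) -> List[str]:
    """Contiguous layout: first block of pairs -> t, next block -> h, last block -> w."""
    axes: List[str] = []
    for axis, count in zip("thw", sections):
        axes.extend([axis] * count)
    return axes


def interleaved_axes(num_pairs: int) -> List[str]:
    """Interleaved layout (assumed strict round robin): t, h, w, t, h, w, ..."""
    return ["thw"[j % 3] for j in range(num_pairs)]


def frequency_span(axes: Sequence[str], freqs: Sequence[float], axis: str) -> Tuple[float, float]:
    """(lowest, highest) frequency available to one positional axis."""
    owned = [f for a, f in zip(axes, freqs) if a == axis]
    return min(owned), max(owned)


def rotation_angles(pos: Dict[str, int], axes: Sequence[str], freqs: Sequence[float]) -> List[float]:
    """Each pair rotates by its frequency times the token's position on that pair's axis."""
    return [pos[a] * f for a, f in zip(axes, freqs)]


freqs = rope_frequencies(64)
for name, axes in [("chunked", chunked_axes([16, 24, 24])), ("interleaved", interleaved_axes(64))]:
    spans = {a: frequency_span(axes, freqs, a) for a in "thw"}
    print(name, {a: (round(lo, 5), round(hi, 3)) for a, (lo, hi) in spans.items()})
```

In the chunked layout the w axis only receives low frequencies, with a highest frequency of about 0.003. In the interleaved layout every axis gets pairs from across the whole spectrum, which is the imbalance the redesign targets.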

Training Pipeline and Data Curation

Training is divided into pre-training and post-training phases. Pre-training proceeds in four stages:

1. Vision-language alignment: 67B tokens at an 8K sequence length.
2. Multimodal pre-training: about 1T tokens.
3. Long-context pre-training: a 32K sequence length.
4. Ultra-long-context adaptation: a 256K sequence length, with 100B tokens.

Growing the sequence length stage by stage lets the model build capabilities incrementally while extending its native context to 256K tokens.
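The staged schedule above can be written down as a small configuration table. This is a sketch, not the team’s actual training config. Figures the text does not give are left as `None`: the sequence length of the multimodal stage and the token budget of the long-context stage. Reading "8K", "32K", and "256K" as multiples of 1024 is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional

K = 1024  # assumption: "8K", "32K", "256K" read as multiples of 1024


@dataclass(frozen=True)
class PretrainStage:
    name: str
    seq_len_tokens: Optional[int]  # None where the text gives no figure
    token_budget: Optional[float]  # None where the text gives no figure


STAGES: List[PretrainStage] = [
    PretrainStage("vision-language alignment", 8 * K, 67e9),
    PretrainStage("multimodal pre-training", None, 1e12),  # "~1T tokens"
    PretrainStage("long-context pre-training", 32 * K, None),
    PretrainStage("ultra-long-context adaptation", 256 * K, 100e9),
]


def stated_lengths_non_decreasing(stages: List[PretrainStage]) -> bool:
    """Check that the stated sequence lengths only ever grow across stages."""
    lengths = [s.seq_len_tokens for s in stages if s.seq_len_tokens is not None]
    return all(a <= b for a, b in zip(lengths, lengths[1:]))


def known_token_total(stages: List[PretrainStage]) -> float:
    """Sum of the stated token budgets (a lower bound, since one budget is unknown)."""
    return sum(s.token_budget for s in stages if s.token_budget is not None)


for s in STAGES:
    print(s.name, s.seq_len_tokens, s.token_budget)
print("stated lengths grow:", stated_lengths_non_decreasing(STAGES))
print("known token total:", known_token_total(STAGES))
```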

Post-training includes supervised fine-tuning with both standard and “thinking” variants, strong-to-weak distillation from teacher models, and reinforcement learning phases targeting reasoning accuracy and general instruction following. The “thinking” paradigm incorporates Chain-of-Thought reasoning and multi-turn interactions with tools, enabling more sophisticated agentic capabilities.
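Strong-to-weak distillation transfers a larger teacher’s output distribution to a smaller student. A common way to do this is to minimize the KL divergence between the teacher’s and the student’s next-token distributions. The sketch below shows that generic form, not the Qwen team’s exact recipe. The temperature parameter and the plain-list logits are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # shift for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_teacher_student(teacher_logits: Sequence[float], student_logits: Sequence[float],
                       temperature: float = 1.0) -> float:
    """KL(p_teacher || p_student) for a single next-token distribution."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0.0)


def distillation_loss(teacher_seq: Sequence[Sequence[float]],
                      student_seq: Sequence[Sequence[float]],
                      temperature: float = 1.0) -> float:
    """Mean per-position KL over a sequence of logit vectors."""
    if len(teacher_seq) != len(student_seq) or not teacher_seq:
        raise ValueError("teacher and student sequences must be non-empty and equal length")
    per_token = [kl_teacher_student(t, s, temperature) for t, s in zip(teacher_seq, student_seq)]
    return sum(per_token) / len(per_token)


teacher = [[2.0, 0.5, -1.0], [0.0, 3.0, 0.0]]
uniform_student = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(distillation_loss(teacher, teacher))
print(distillation_loss(teacher, uniform_student))
```

The loss is zero only when the student reproduces the teacher’s distribution at every position. Minimizing it pushes the smaller model toward the teacher’s behavior rather than toward one-hot labels alone.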

The training data was also substantially overhauled across domains. The team assembled the following:

- 30M OCR samples covering 39 languages.
- 7M parsed PDF documents.
- Synthetic geometric diagrams for STEM reasoning.
- Large-scale GUI interaction data for agentic tasks.

Video data includes densely synthesized captions with temporal grounding. Code data covers both conventional programming and multimodal coding tasks such as UI-to-HTML conversion.

Performance Achievements

Architectural overview of Qwen3-VL showing the integration of vision encoder, multimodal processing, and language model components with native support for interleaved text, images, and video

Qwen3-VL achieves state-of-the-art or highly competitive performance across numerous benchmarks. The flagship Qwen3-VL-235B-A22B-Thinking model scores 78.7 on MMStar and achieves leading results on mathematical reasoning benchmarks including MathVista (85.8), MathVision (74.6), and MathVerse (85.0). For document understanding, it sets new records on OCR-focused benchmarks and demonstrates superior long-document comprehension with 57.0% accuracy on MMLongBench-Doc.

The model excels at spatial understanding. It scores 92.1 on RefCOCO-avg for 2D grounding and outperforms Gemini-2.5-Pro by 5.2 points on 3D grounding. The architectural changes also strengthen video understanding: the 8B model matches the performance of its much larger predecessor, Qwen2.5-VL-72B.
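Referring-expression grounding benchmarks in the RefCOCO family are commonly scored as accuracy at an intersection-over-union (IoU) threshold of 0.5. A predicted box counts as correct when its IoU with the ground-truth box is at least 0.5. Below is a minimal sketch, assuming axis-aligned `(x1, y1, x2, y2)` boxes. How the RefCOCO-avg figure aggregates individual splits is not specified here.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2


def box_area(b: Box) -> float:
    # Clamp to zero so an empty (non-overlapping) intersection has no area.
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter = box_area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: Sequence[Box], targets: Sequence[Box], threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the matching target meets the threshold."""
    if len(preds) != len(targets) or not targets:
        raise ValueError("need equal-length, non-empty prediction and target lists")
    hits = sum(1 for p, t in zip(preds, targets) if iou(p, t) >= threshold)
    return hits / len(targets)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(grounding_accuracy([(0, 0, 2, 2), (5, 5, 6, 6)], [(0, 0, 2, 2), (0, 0, 1, 1)]))
```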

Notably, Qwen3-VL maintains or improves upon text-only performance while adding multimodal capabilities. The model demonstrates competitive results on pure language tasks, validating the team’s approach to preserving linguistic proficiency during multimodal training.

Long-Context Capabilities and Multilingual Support

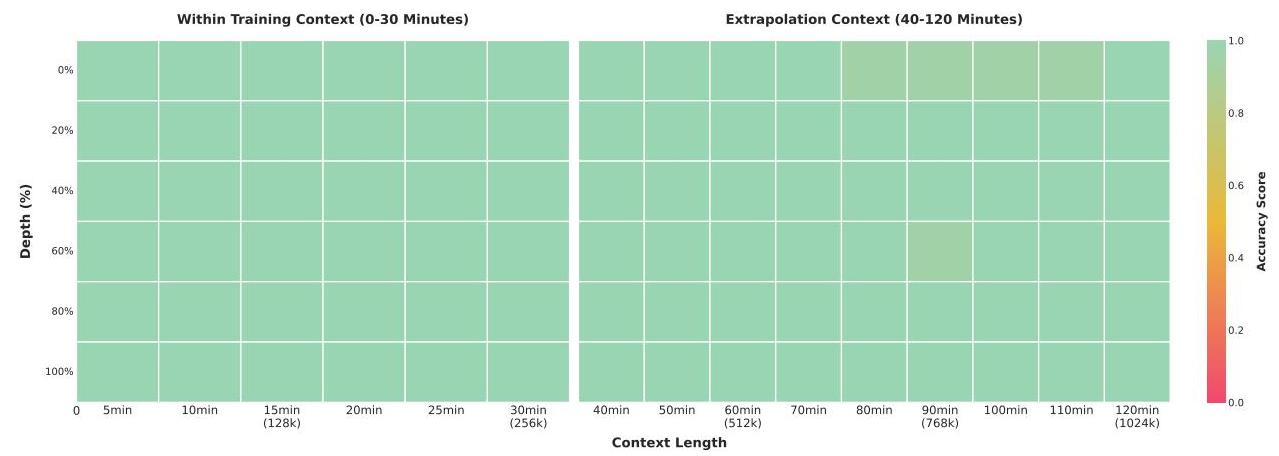

A defining feature of Qwen3-VL is its native support for 256K-token contexts with demonstrated extrapolation to 1M tokens. This capability enables processing of lengthy documents, extended video content, and complex interleaved multimodal sequences. The model maintains high accuracy in “needle-in-a-haystack” tests, achieving 100% accuracy for videos up to 30 minutes and 99.5% accuracy for content extending to 2 hours.
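A video needle-in-a-haystack test hides one target frame (the "needle") at varying positions in videos of increasing length. It then checks whether the model retrieves that frame. The sketch below shows only the evaluation loop, with frames stood in by strings. The `Model` callable, the depth grid, and the toy windowed model are hypothetical placeholders, not the Qwen team’s harness.

```python
from typing import Callable, Dict, List, Sequence

Model = Callable[[List[str], str], str]  # hypothetical: (frames, question) -> answer


def build_haystack(num_frames: int, depth: float, needle: str) -> List[str]:
    """Filler frames with the needle inserted at a relative depth in [0.0, 1.0]."""
    frames = [f"frame_{i}" for i in range(num_frames)]
    frames.insert(int(depth * num_frames), needle)
    return frames


def niah_accuracy(model: Model, frame_counts: Sequence[int], depths: Sequence[float],
                  needle: str, question: str, answer: str) -> Dict[int, float]:
    """Accuracy per video length, averaged over needle depths."""
    results: Dict[int, float] = {}
    for n in frame_counts:
        hits = sum(model(build_haystack(n, d, needle), question) == answer for d in depths)
        results[n] = hits / len(depths)
    return results


def make_window_model(window: int, needle: str, answer: str) -> Model:
    """Toy stand-in for a real model: it only 'sees' the first `window` frames."""
    def model(frames: List[str], question: str) -> str:
        return answer if needle in frames[:window] else "unknown"
    return model


needle, answer = "NEEDLE: the code word is 'amber'", "amber"
toy = make_window_model(100, needle, answer)
print(niah_accuracy(toy, [50, 200], [0.0, 0.25, 0.5, 0.75, 1.0],
                    needle, "What is the code word?", answer))
```

The toy model scores perfectly on short videos but fails once the needle lies beyond its window. That failure pattern is what the test is designed to expose; a real evaluation would replace the string frames and the toy model with decoded video and an actual model call.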

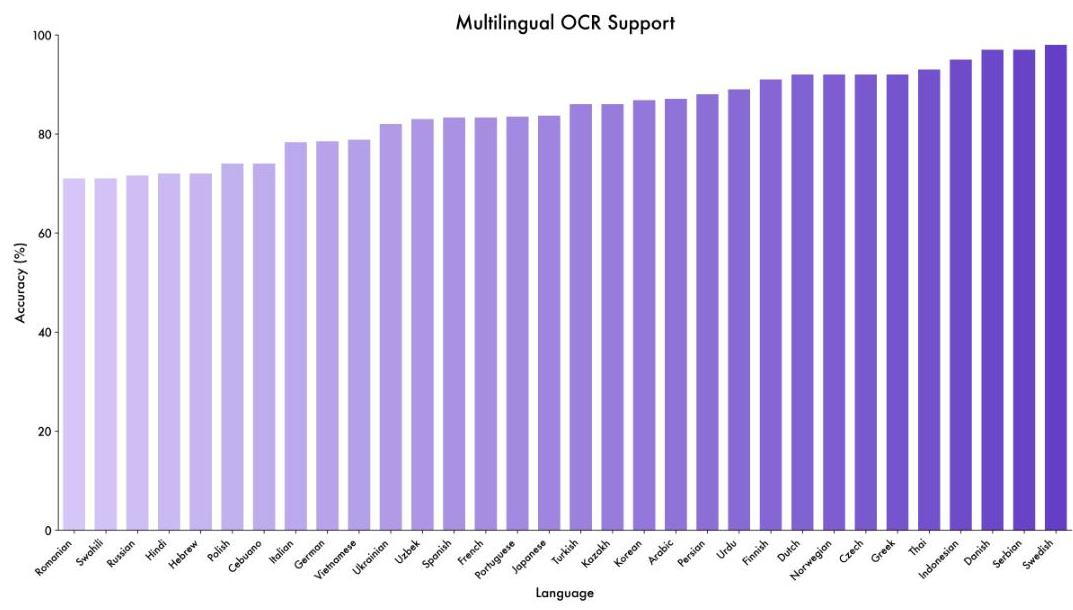

Qwen3-VL demonstrates strong multilingual OCR capabilities across 39 languages, with over 70% accuracy on 32 languages

The multilingual capabilities extend across 39 languages for OCR tasks, with over 70% accuracy on 32 languages. This broad language support, combined with extensive multilingual training data, positions Qwen3-VL as a globally applicable multimodal system.

Context Extrapolation and Long-Video Understanding

Analysis showing Qwen3-VL’s maintained performance across extended context lengths, from training contexts (0-30 minutes) to extrapolation contexts (40-120 minutes)

Context extrapolation is a notable technical result. The training sequence length grows from 8K to 256K tokens across the pre-training stages, and the final model extrapolates effectively to 1M tokens. This supports applications that analyze very long videos, extensive documents, and complex multimodal narratives, all previously difficult for vision-language models.

Open Source Impact and Accessibility

Qwen3-VL is released under the Apache 2.0 license, making the entire model family freely available for research and commercial applications. This open-source approach accelerates community innovation while providing researchers access to state-of-the-art multimodal capabilities. The range of model sizes ensures deployment flexibility across different computational constraints, from edge devices to high-performance cloud environments.

The combination of superior performance, long-context understanding, multilingual support, and open availability positions Qwen3-VL as a foundational platform for advancing multimodal AI applications, particularly in areas requiring deep reasoning, document analysis, and agentic interactions with digital environments.